Intro

Did you know that Node.js is single-threaded? This means that it can only fully use one CPU core [1].

If you run Next.js in a multi-core or multi-CPU environment, such as a dedicated server, a VM, or a Kubernetes deployment, rather than on Vercel or another edge / serverless platform, you are wasting resources!

Use all the cores!

The solution is to run multiple instances of Next.js, each on a different CPU core. This is where PM2 comes in.

PM2 is a process manager for Node.js applications. It can run multiple instances of your application and load-balance requests between them. It also has a lot of other features, like automatic restarts, logging, and monitoring. Even if you don’t need those extras, for example because K8s already manages all that, PM2 is still worth using for its transparent multi-instance capabilities within the same machine or deployment.
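PM2’s cluster mode builds on Node’s built-in `cluster` module. As a rough illustration of what it automates for you, here is a minimal hand-rolled sketch. `startCluster` and its options are hypothetical names made up for this example, and PM2 itself does considerably more (the restarts, logging, and monitoring mentioned above).

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

// Hypothetical helper, not part of PM2: fork `workers` processes that all
// listen on the same port, and let the primary process hand out connections.
function startCluster({ port = 3000, workers = os.cpus().length } = {}) {
  if (cluster.isPrimary) {
    for (let i = 0; i < workers; i++) {
      cluster.fork();
    }
    // Replace a worker when it exits, similar in spirit to PM2's auto-restart.
    cluster.on('exit', () => cluster.fork());
    return;
  }

  // Worker process: every worker runs this same server code.
  http
    .createServer((req, res) => {
      res.end(`Handled by process ${process.pid}\n`);
    })
    .listen(port);
}

// To try it, call startCluster() at the bottom of a standalone script.
```

With PM2 you get the same effect from a config file, without touching your application code.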

Let’s see how to set up PM2 to run Next.js and make full use of all the resources of your server.

Install PM2

First, install PM2 globally. I also recommend installing pm2-logrotate so that the logs don’t grow indefinitely.

    npm install -g pm2
    pm2 install pm2-logrotate

Set up the PM2 config file

Create a file called pm2.config.js in the root of your project, with the following content:

    module.exports = {
      apps: [
        {
          name: 'nextjs',
          script: './node_modules/next/dist/bin/next',
          args: 'start',
          exec_mode: 'cluster',
          instances: -1,
        },
      ],
    };

This starts the app in PM2’s cluster mode, which load-balances requests across instances that all share the same port. That is what we want, and it is something the default fork mode doesn’t do.

By setting instances: -1, PM2 will start as many instances as there are CPU cores, minus one. This is a good balance, as it leaves a core available for the PM2 process manager itself and any other processes the system might need.
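To make the arithmetic concrete, here is a small sketch of how an `instances` value maps to a process count. `resolveInstances` is a hypothetical helper that mirrors the documented meaning of the option; it is not PM2’s own code, and the clamp to at least one process is an assumption.

```javascript
const os = require('node:os');

// Hypothetical helper, not PM2's implementation.
function resolveInstances(instances, cpuCount = os.cpus().length) {
  // 'max' (or 0) means one process per CPU core.
  if (instances === 'max' || instances === 0) return cpuCount;
  // Negative values are subtracted from the core count.
  // Assumption: never go below a single process.
  if (instances < 0) return Math.max(1, cpuCount + instances);
  // Positive values are taken literally.
  return instances;
}

console.log(resolveInstances(-1, 8)); // 7
console.log(resolveInstances('max', 8)); // 8
```

So on an 8-core machine, `instances: -1` gives you 7 Next.js processes.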

Running with PM2

Now start your Next.js app with PM2:

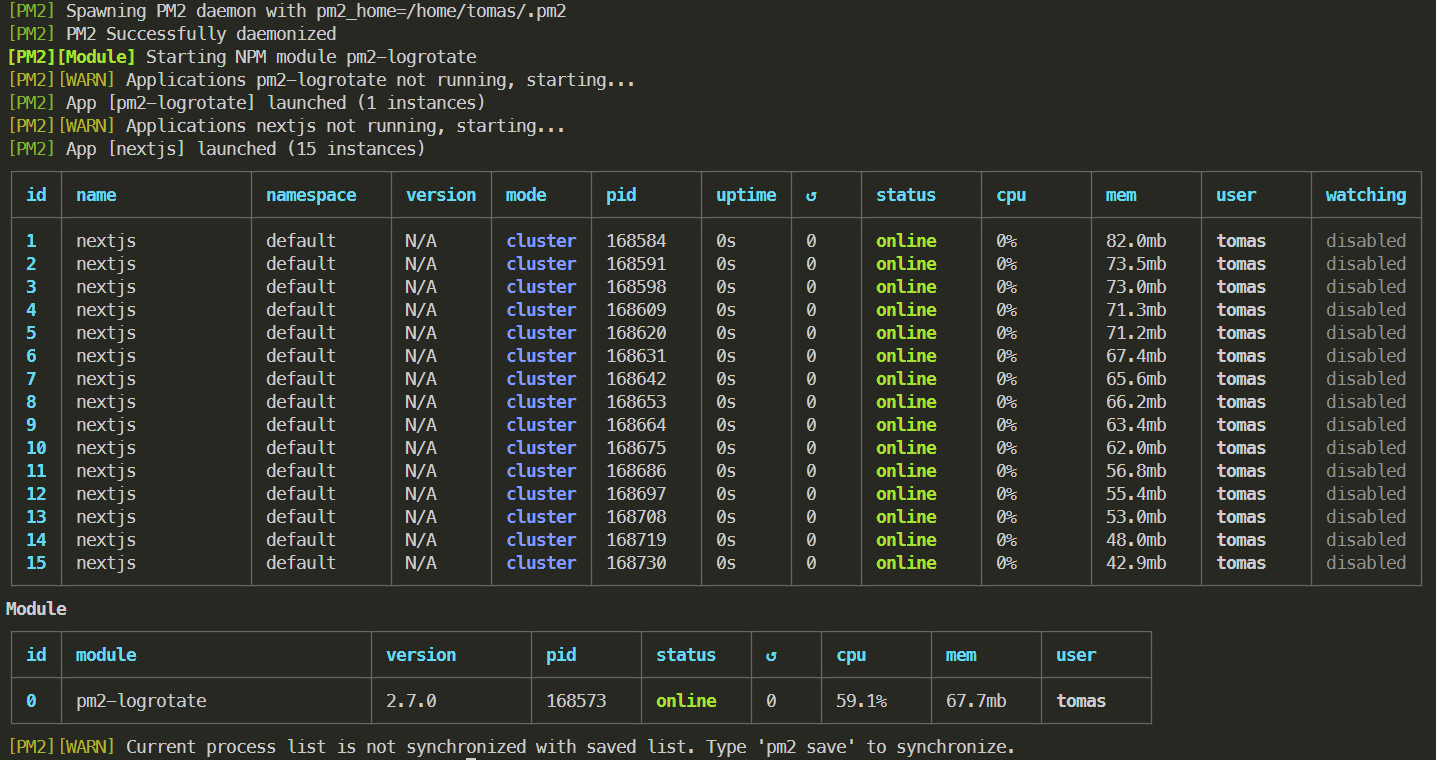

pm2 start pm2.config.jsAfter a few seconds, you should see a list of processes that PM2 has created for your app.

So many processes!

You can see this list again at any time with the pm2 list command.

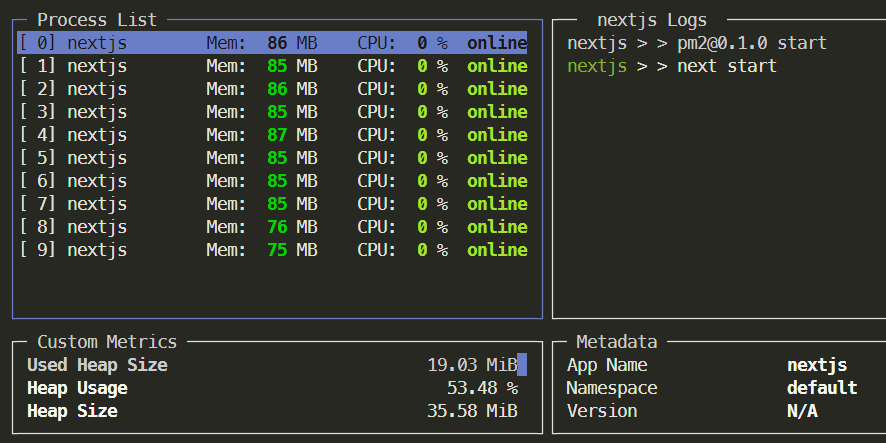

Another cool thing you can do is to continuously monitor your processes with pm2 monit:

Now test it out! Open your browser to localhost:3000 and your site should be served as expected. PM2 is actually routing the requests to different instances of your app, spreading the load, but this is totally transparent.
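If you want to see the load balancing for yourself, one option is to log which instance handled each request. The sketch below assumes you call it from your own server-side code (a route handler, for example). `instanceLabel` is a hypothetical helper; `NODE_APP_INSTANCE` is the environment variable PM2 sets for each process it starts.

```javascript
// Hypothetical helper: describe the PM2 instance running this code.
// Falls back to 'n/a' when the app is not started by PM2.
function instanceLabel(env = process.env, pid = process.pid) {
  const id = env.NODE_APP_INSTANCE ?? 'n/a';
  return `instance ${id} (pid ${pid})`;
}

console.log(instanceLabel({ NODE_APP_INSTANCE: '3' }, 4242)); // instance 3 (pid 4242)
```

Logging `instanceLabel()` on the server and refreshing the page a few times should show different labels in the PM2 logs.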

Benchmarks

But is it really any better, performance-wise? Let’s run some benchmarks.

We will request the create-next-app index page, but with `export const dynamic = 'force-dynamic'` to force Server Side Rendering and tax the server a bit more than just serving a static page.

These are the results of running siege with 1000 concurrent requests on a single instance of Next.js vs 10 instances of Next.js via PM2, for 60 seconds:

    "transactions": 67790
    "availability": 99.89
    "elapsed_time": 57.30
    "data_transferred": 688.32
    "response_time": 0.64
    "transaction_rate": 1183.07
    "throughput": 12.01
    "concurrency": 758.93
    "successful_transactions": 67790
    "failed_transactions": 75
    "longest_transaction": 29.67
    "shortest_transaction": 0.07

For a single instance, we reach a concurrency of ~760 requests, which is not bad, but some requests failed and the response time went through the roof, reaching 30 seconds in the worst case. This is a clear sign that the server is overloaded.

    "transactions": 148859
    "availability": 100.00
    "elapsed_time": 56.70
    "data_transferred": 1502.32
    "response_time": 0.38
    "transaction_rate": 2625.38
    "throughput": 26.50
    "concurrency": 996.10
    "successful_transactions": 148859
    "failed_transactions": 0
    "longest_transaction": 2.21
    "shortest_transaction": 0.14

With 10 instances, the concurrency reached nearly 1000, and it could quite possibly handle much more; I just could not test more than 1000 concurrent requests on my machine without getting socket errors :).

Additionally, there were no failed requests, and the worst-case response time was just 2.2 seconds, compared to 30 seconds for the single instance!

The advantage of using PM2 to spread the load between multiple cores is clear.
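To put numbers on that comparison, here is a quick calculation using the figures from the two siege reports above. The variable names are made up for this sketch.

```javascript
// Figures copied from the two siege reports above.
const single = { transaction_rate: 1183.07, response_time: 0.64, longest_transaction: 29.67 };
const tenInstances = { transaction_rate: 2625.38, response_time: 0.38, longest_transaction: 2.21 };

// How many times larger `a` is than `b`.
const ratio = (a, b) => a / b;

const summary = {
  throughputGain: ratio(tenInstances.transaction_rate, single.transaction_rate),
  meanResponseImprovement: ratio(single.response_time, tenInstances.response_time),
  worstCaseImprovement: ratio(single.longest_transaction, tenInstances.longest_transaction),
};

for (const [name, value] of Object.entries(summary)) {
  console.log(`${name}: ${value.toFixed(2)}x`);
}
```

That works out to roughly 2.2 times the transaction rate, and a worst-case response time about 13 times shorter.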

Making it work in production

Running PM2 for development is not really necessary; you are perfectly fine running a single instance of the Next.js server as usual.

Where PM2 makes sense is on a production deployment. Here is how to set it up based on the official Dockerfile from Vercel for Next.js:

1. Change the pm2.config.js file to run the standalone mode server

The recommended way to run Next.js in production is in standalone mode, which reduces the built application size by only including the necessary files and node_modules dependencies. This is great for minimizing the Docker image size, but Next.js is started differently in this mode, so we have to adjust the PM2 config file:

    module.exports = {
      apps: [
        {
          name: 'nextjs',
          script: './server.js',
          args: 'start',
          exec_mode: 'cluster',
          instances: -1,
        },
      ],
    };

2. Update the Dockerfile to install and run PM2

We have to add commands to install PM2 and logrotate, and change the command that starts Next.js to instead run PM2 using pm2-runtime.

It’s important to use pm2-runtime, which keeps the PM2 process in the foreground. The regular pm2 command runs in the background as a daemon, so the container would exit right away.

Change the end of the Dockerfile to:

    ...
    RUN npm install -g pm2
    RUN pm2 install pm2-logrotate
    RUN pm2 update

    COPY --from=builder --chown=nextjs:nodejs /app/pm2.config.js ./pm2.config.js

    USER nextjs

    EXPOSE 3000
    ENV PORT=3000

    # listen on all network interfaces
    ENV HOSTNAME="0.0.0.0"

    # server.js is created by next build from the standalone output
    # https://nextjs.org/docs/pages/api-reference/next-config-js/output
    CMD ["pm2-runtime", "start", "pm2.config.js"]

And that’s it! Now you can run Next.js in production as multiple load-balanced instances, fully utilizing the resources of your server. Enjoy the extra performance!

You can find a full example of this setup at https://github.com/tomups/nextjs-pm2

Footnotes

[1] This can be circumvented by using worker threads, but that’s a topic for another day.